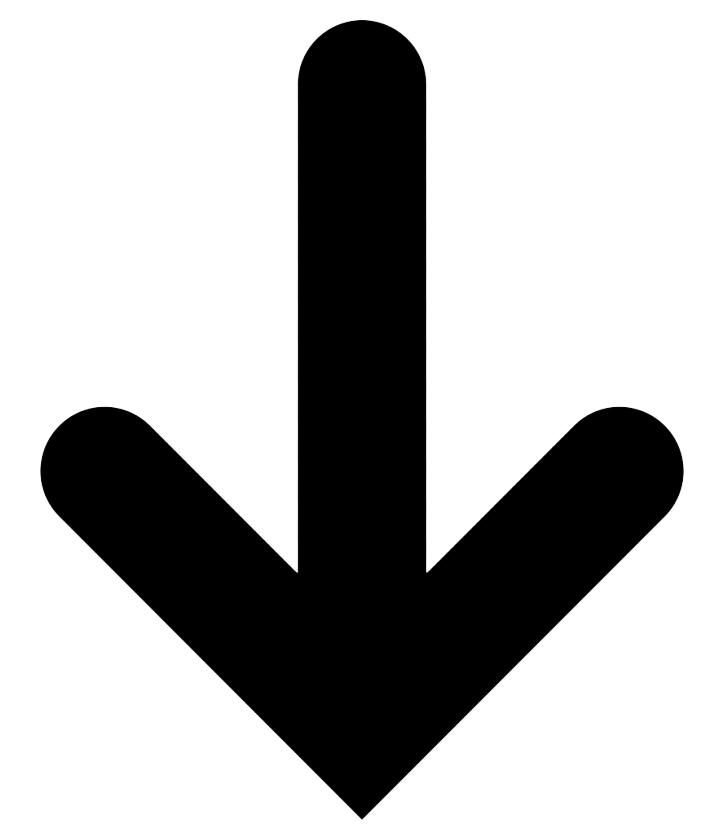

Why existing solutions fail:

- Chat-only interfaces assume everyone is a prompt engineer.

- Parameter dashboards assume everyone wants to tinker.

- Both ignore how humans actually work: set preferences once, adjust in the moment, refine after seeing results.

GRANULAR CONTROL

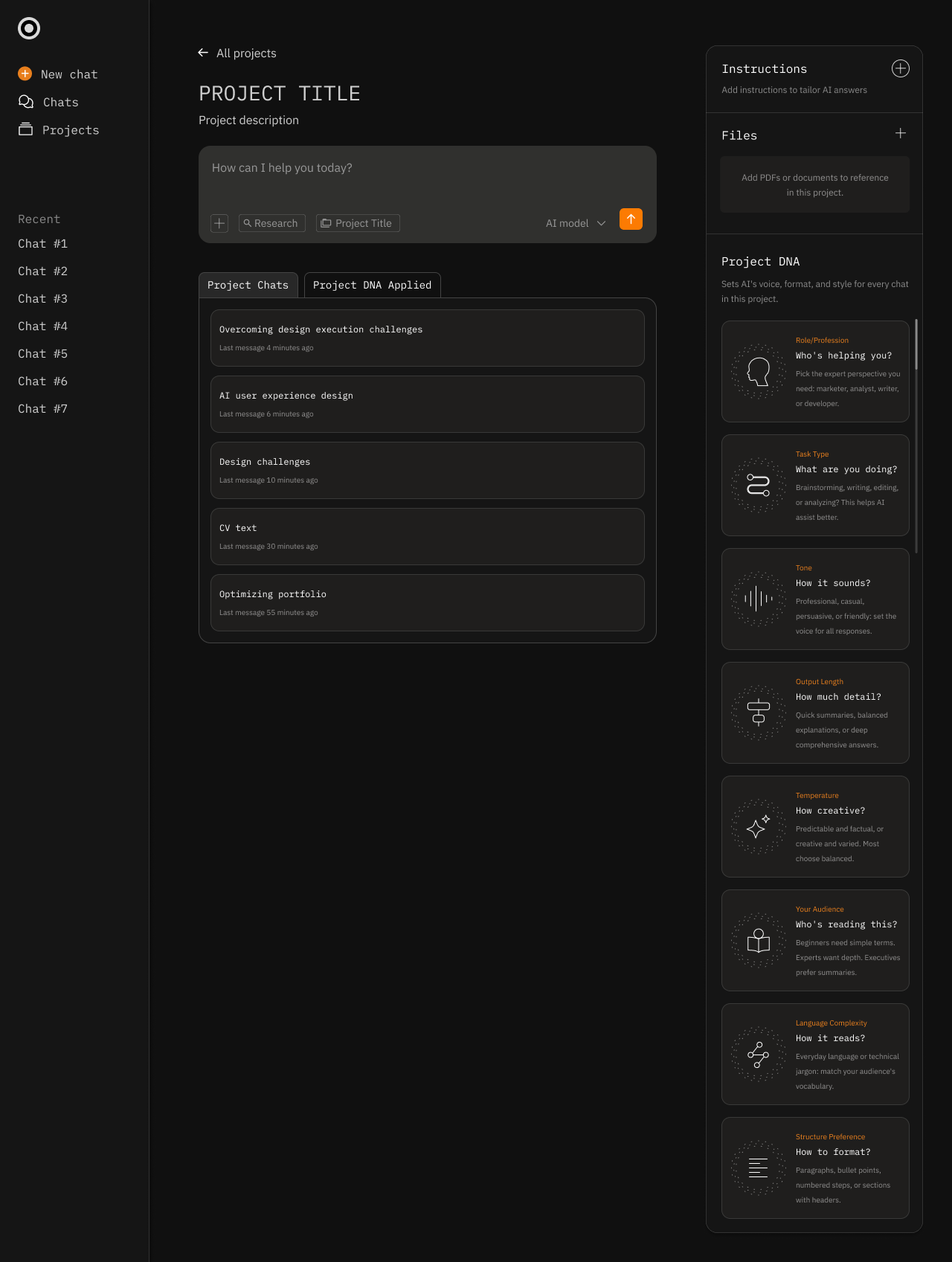

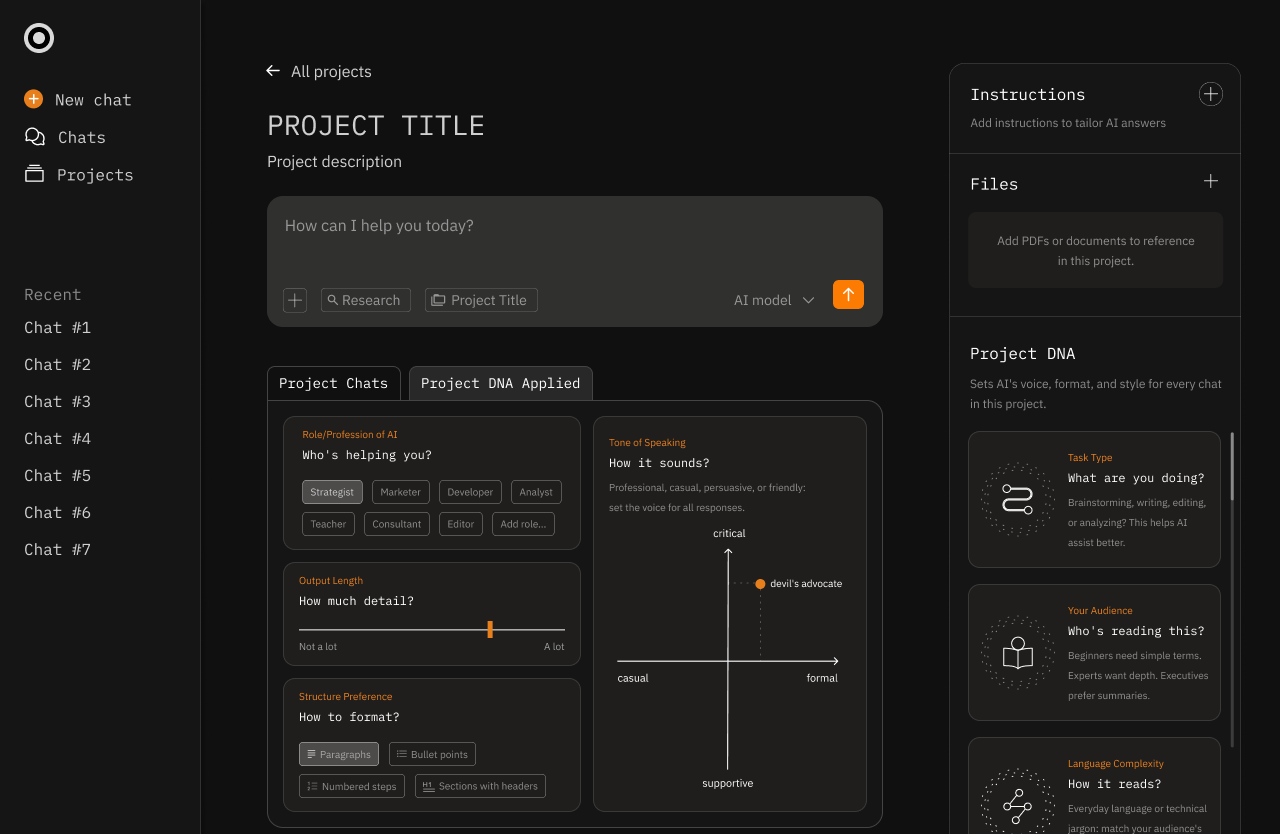

The Invictus Framework: Three Layers of Control

The framework emerged from watching how users naturally tried to interact with AI. Three layers, each serving a different moment in the workflow.

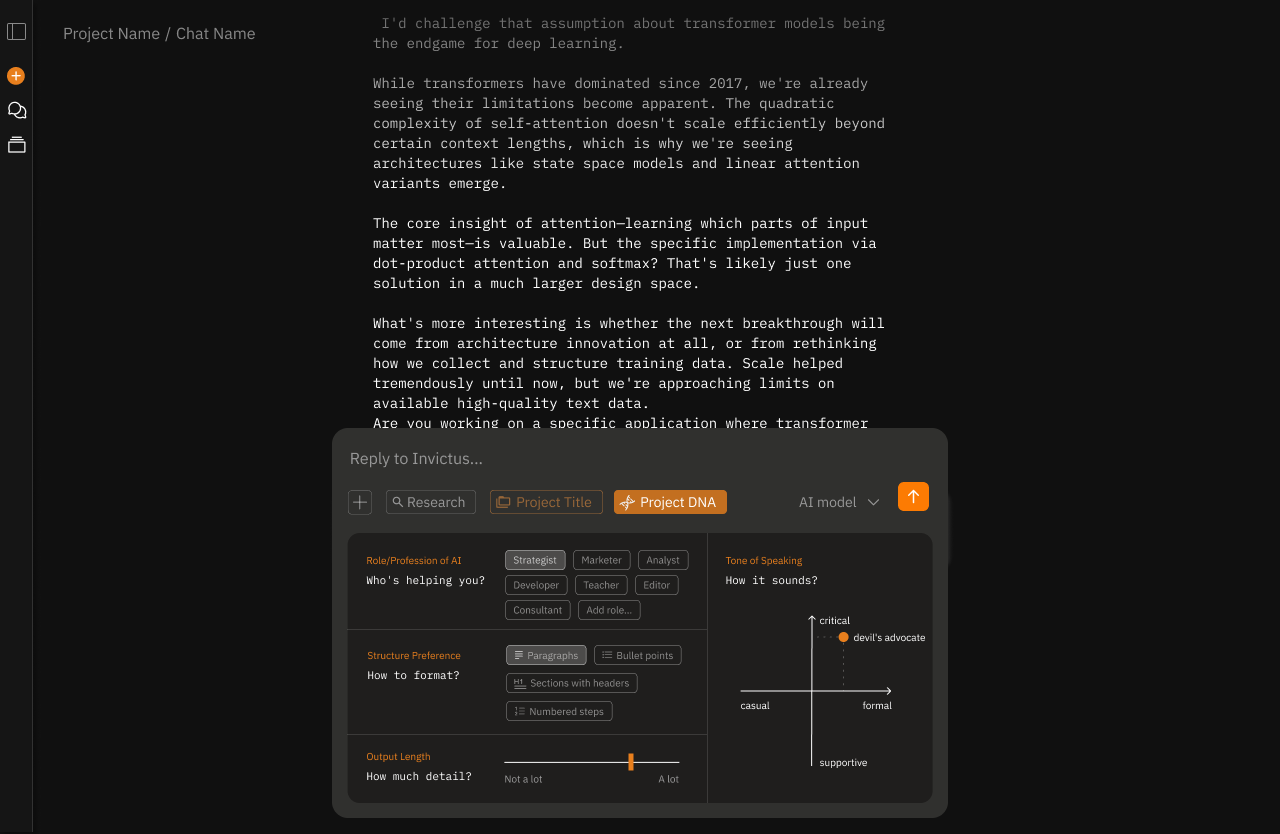

Layer 1: Project DNA – Set it and forget it

Persistent defaults that travel with you. Your role, tone, and format preferences. The AI remembers, so you don't repeat yourself in every prompt.

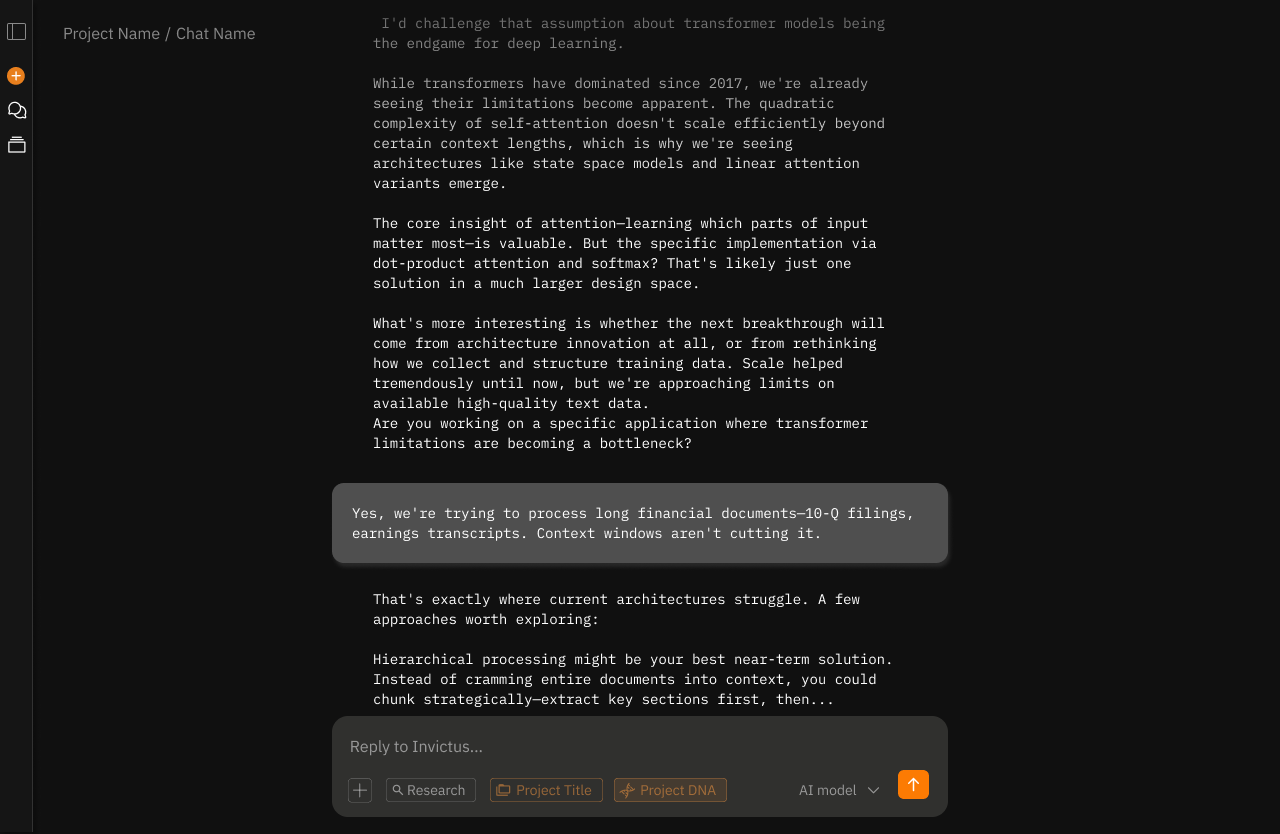

Layer 2: Prompt Controls – Override in the moment

Four quick adjustments above the chat: length, tone, task type, and format. Use them when you need formal instead of casual, or detailed instead of brief, without changing your defaults.

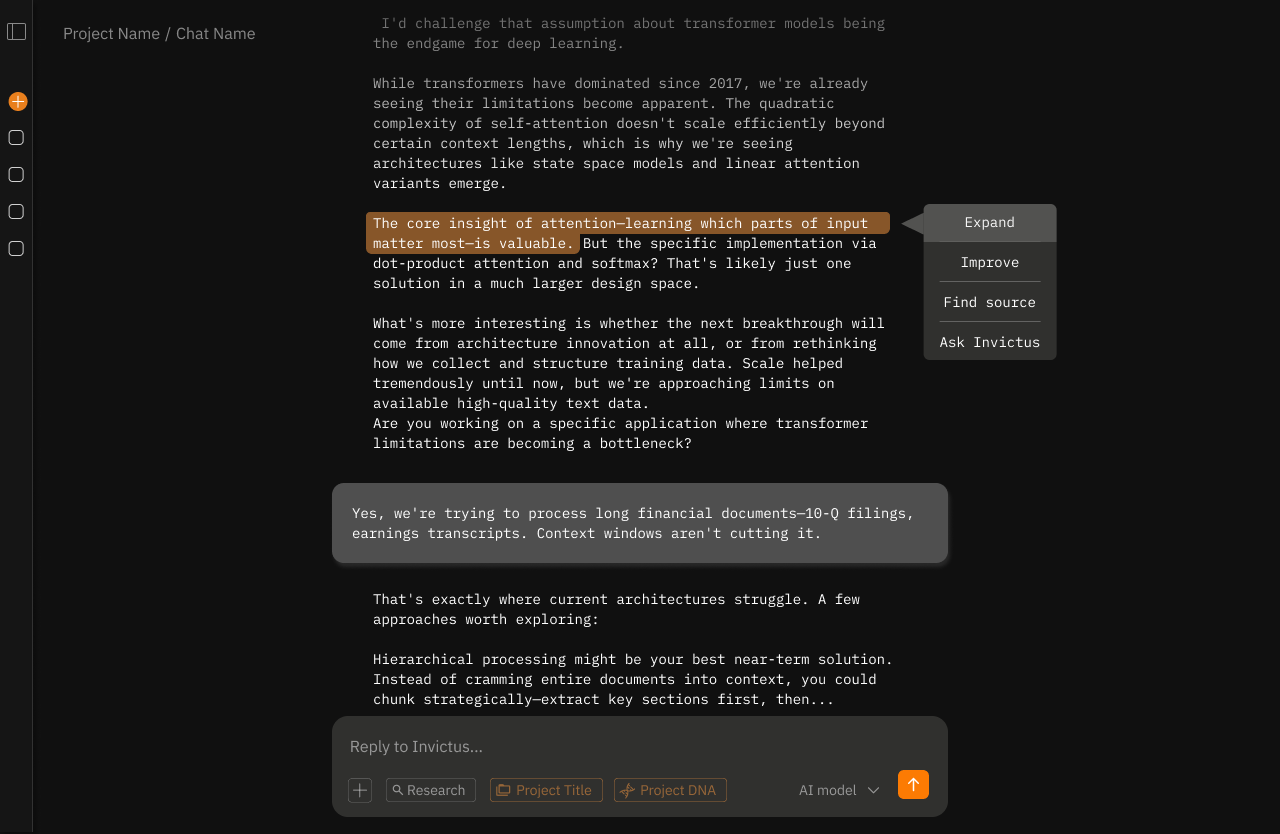

Layer 3: Section Editing – Refine without restarting

Select any part of the response and adjust it. Expand this section, improve that paragraph, find sources for this claim. No need to regenerate everything.

The logic: Most recent control wins. Prompt Controls override Project DNA. Section Edits override the original response. This matches how humans think: "I usually want X, but right now I need Y."
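The precedence rule can be sketched in a few lines of Python. This is only an illustration, not the framework's actual implementation: the function names, the setting keys, and the example values are all assumptions. The sketch treats Project DNA as stored defaults, Prompt Controls as a per-prompt overlay, and a Section Edit as a replacement of a single section.

```python
# Hypothetical stored defaults (Layer 1). Keys and values are illustrative only.
PROJECT_DNA = {"role": "product designer", "tone": "casual", "format": "bullets"}


def resolve_settings(project_dna, prompt_controls):
    """Layer 2 over Layer 1: the most recent control wins, for this prompt only."""
    resolved = dict(project_dna)  # copy, so the stored defaults are never mutated
    # A control left unset (None) falls through to the Project DNA default.
    resolved.update({k: v for k, v in prompt_controls.items() if v is not None})
    return resolved


def apply_section_edit(sections, index, revised):
    """Layer 3: replace one section and keep the rest of the response as-is."""
    updated = list(sections)
    updated[index] = revised
    return updated


# "I usually want casual, but right now I need formal."
settings = resolve_settings(PROJECT_DNA, {"tone": "formal", "length": None})

# Refine only the second section instead of regenerating everything.
response = ["Intro", "Short summary", "Conclusion"]
edited = apply_section_edit(response, 1, "Expanded summary with sources")
```

Copying before merging is the key design choice here: an override applies to one prompt or one section, and the defaults and the original response stay intact for the next turn.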

Why this works:

- Novices see a simple chat interface.

- Controls reveal themselves progressively as users engage.

- Power users get granular control without UI clutter.

- Everyone gets exactly the complexity they need, when they need it.